Multi-view Method for Forest Fire Detection Based on Omni-Dimensional Dynamic Convolution and Focal-IoU


摘要:

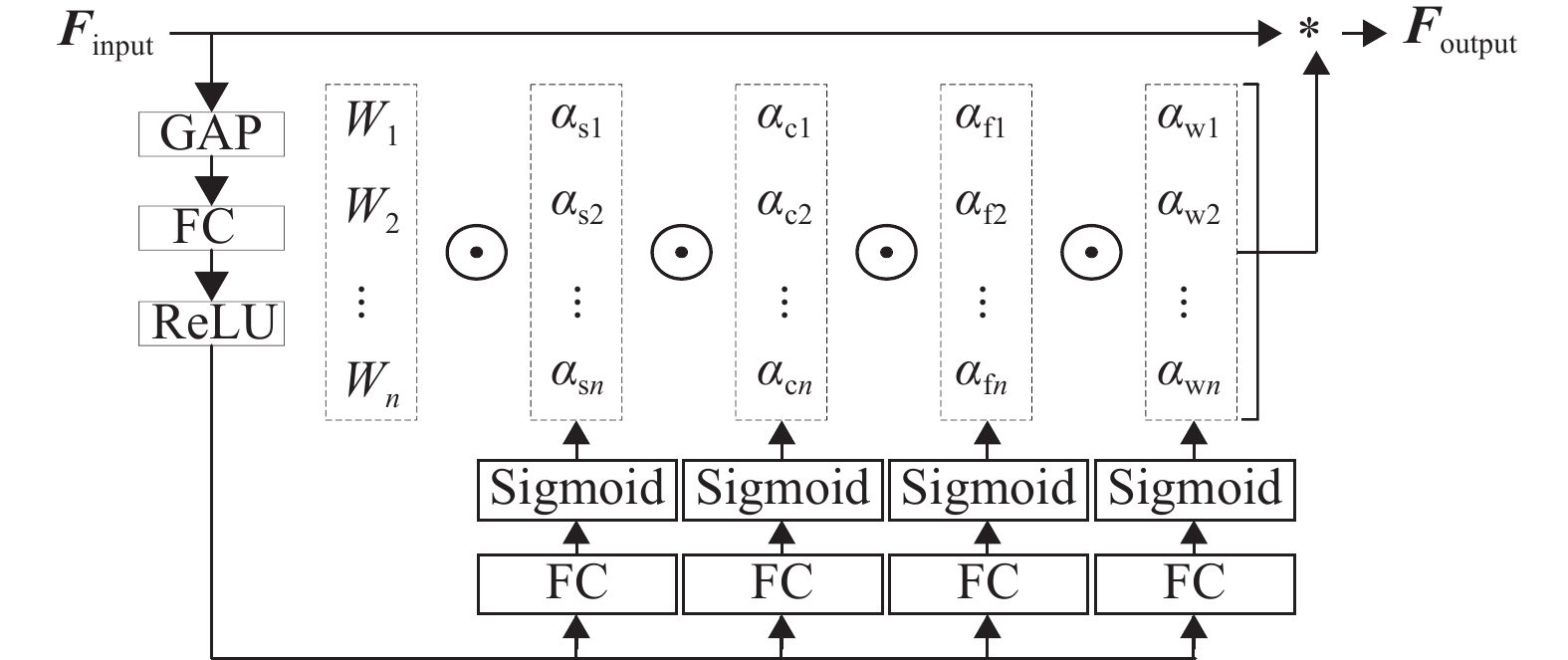

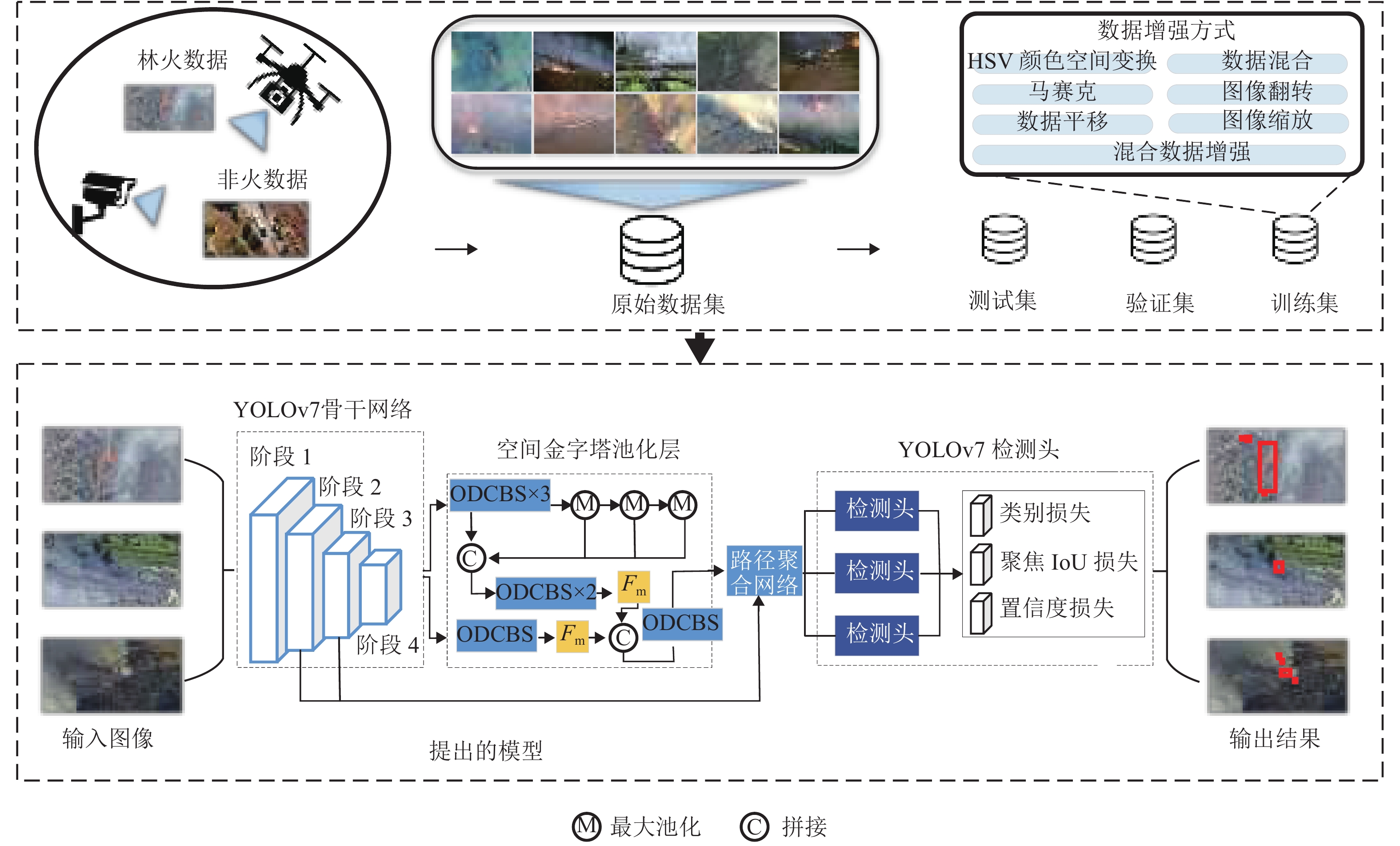

森林火点检测在林火应急救援中起着至关重要的作用. 鉴于现有模型在样本质量、多尺度问题以及多视角图像泛化能力方面存在不足,以YOLOv7为基础,提出一种森林火点目标检测方法FFD-YOLO (forest fire detection based on YOLO). 首先,构建多视角可见光图像森林火灾高点检测数据集FFHPV (forest fire of high point view),旨在增强模型对多视角火点知识的学习能力;其次,引入全维动态卷积,构建空间金字塔池化层(OD-SPP),以此提升模型针对多视角数据的火点特征提取能力;最后,引入具有动态非单调聚焦机制的边界框定位损失函数Wise-IoU (wise intersection over union),降低低质量数据对模型精度的影响,提高小目标火点的检测能力. 实验结果表明:所提出的FFD-YOLO方法相较于YOLOv7,精度提高3.9%,召回率提高3.7%,均值平均精度提高4.0%,F1分数提高0.038;同时,在与YOLOv5、YOLOv8、DDQ (dense distinct query)、DINO (detection transformer with improved denoising anchor boxes)、Faster R-CNN、Sparse R-CNN、Mask R-CNN、FCOS和YOLOX的对比实验中,FFD-YOLO具有最高的精度75.3%、召回率73.8%、均值平均精度77.6%和F1分数0.745,验证了该方法的可行性与有效性.

Abstract:Forest fire detection is crucial for forest fire emergency rescue. To address the shortcomings of existing models in sample quality, multi-scale issues, and generalization capability across multi-view images, a method for forest fire detection based on YOLO (FFD-YOLO) was proposed. First, a multi-view visible light image dataset for detecting forest fire from of high point view (FFHPV) was constructed to enhance the model’s learning capability for multi-view fire information. Second, omni-dimensional dynamic convolution was introduced to develop an omni-dimensional spatial pyramid pooling (OD-SPP) to improve the model’s feature extraction capacity for multi-view fire characteristics. Finally, a wise intersection over union (Wise-IoU) loss function with a dynamic non-monotonic focusing mechanism was introduced to mitigate the impact of low-quality data on model precision and enhance small-target fire detection. Experimental results have demonstrated that FFD-YOLO increased precision by 3.9%, recall by 3.7%, mean average precision (mAP) by 4.0%, and F1-score by 0.038 compared to YOLOv7. In comparative experiments with YOLOv5, YOLOv8, dense distinct query (DDQ), detection transformer with improved denoising anchor boxes (DINO), Faster R-CNN, Sparse R-CNN, Mask R-CNN, FCOS, and YOLOX, FFD-YOLO attained 75.3% precision, 73.8% recall, 77.6% mAP, and 0.745 F1-score, validating its feasibility and effectiveness.
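To make the "dynamic non-monotonic focusing mechanism" more concrete, the following is a minimal sketch of a Wise-IoU-style loss for a single box pair, written from the general form given in the Wise-IoU paper rather than from the authors' code. The function names, the `(x1, y1, x2, y2)` box format, and the hyperparameter values `alpha=1.9` and `delta=3.0` are assumptions for illustration. In training, `mean_iou_loss` would be a running mean of the IoU loss and the enclosing-box term would be excluded from the gradient; neither is modelled here.

```python
import math


def focusing_gain(beta, alpha=1.9, delta=3.0):
    """Non-monotonic gain r = beta / (delta * alpha ** (beta - delta)).

    beta is the outlier degree (IoU loss divided by its running mean).
    alpha and delta are assumed hyperparameter values.
    """
    return beta / (delta * alpha ** (beta - delta))


def wise_iou_loss(box, gt, mean_iou_loss, alpha=1.9, delta=3.0):
    """Sketch of a Wise-IoU-style loss for boxes given as (x1, y1, x2, y2)."""
    # Plain IoU between the predicted box and the ground-truth box.
    iw = max(0.0, min(box[2], gt[2]) - max(box[0], gt[0]))
    ih = max(0.0, min(box[3], gt[3]) - max(box[1], gt[1]))
    inter = iw * ih
    area_box = (box[2] - box[0]) * (box[3] - box[1])
    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    iou_loss = 1.0 - inter / (area_box + area_gt - inter)

    # Distance attention: squared center distance normalized by the
    # squared size of the smallest enclosing box.
    wg = max(box[2], gt[2]) - min(box[0], gt[0])
    hg = max(box[3], gt[3]) - min(box[1], gt[1])
    dx = (box[0] + box[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (box[1] + box[3]) / 2 - (gt[1] + gt[3]) / 2
    distance_term = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))

    # Outlier degree relative to the running mean of the IoU loss.
    beta = iou_loss / mean_iou_loss
    return focusing_gain(beta, alpha, delta) * distance_term * iou_loss


if __name__ == "__main__":
    for beta in (0.5, 1.5, 3.0, 6.0):
        print(beta, round(focusing_gain(beta), 3))
```

With these assumed values the gain is small for very easy boxes (low outlier degree), peaks for moderately hard boxes, and falls again for extreme outliers, which is how such a loss can down-weight low-quality annotations.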


Table 1. Number of items of each data type in the dataset

| Type | Count |
| --- | --- |
| Images with fire | 5003 |
| Images without fire | 1043 |
| Total annotations | 20176 |
| Small targets (32×32) | 11177 |
| Horizontal-view images | 1286 |
| Vertical-view images | 1512 |
| Oblique-view images | 3248 |

Table 2. Detection metrics of the proposed method and other object detectors on the FFHPV dataset

| Method | P/% | R/% | mAP50/% | F1 | FLOPs/(×10⁹) | Params/(×10⁶) | FPS/(frames·s⁻¹) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| YOLOv5 | 74.2 | 71.5 | 74.5 | 0.728 | 49.0 | 21.2 | 83.2 |
| YOLOv7 | 71.4 | 70.1 | 73.6 | 0.707 | 104.7 | 36.9 | 56.7 |
| YOLOv8 | 74.3 | 67.4 | 74.1 | 0.706 | 78.9 | 25.9 | 60.6 |
| DDQ | 61.1 | 55.7 | 61.3 | 0.582 | 200.7 | 47.2 | 24.7 |
| DINO | 60.3 | 52.6 | 60.0 | 0.561 | 279.0 | 47.0 | 22.0 |
| Faster R-CNN | 57.8 | 33.8 | 57.5 | 0.426 | 207.0 | 40.3 | 21.4 |
| Sparse R-CNN | 51.7 | 43.4 | 51.8 | 0.471 | 160.2 | 45.7 | 32.5 |
| Mask R-CNN | 51.2 | 35.9 | 51.6 | 0.422 | 113.0 | 44.4 | 52.1 |
| FCOS | 47.5 | 26.9 | 47.7 | 0.343 | 107.5 | 90.2 | 51.8 |
| YOLOX | 63.2 | 58.6 | 63.8 | 0.608 | 73.8 | 25.3 | 58.4 |
| FFD-YOLO | 75.3 | 73.8 | 77.6 | 0.745 | 104.5 | 37.6 | 56.8 |
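The F1 column in Table 2 can be cross-checked against the P and R columns, assuming the usual definition of F1 as the harmonic mean of precision and recall. The short sketch below does this for three rows of the table; the function name `f1_from_percent` is hypothetical, and the input values are copied from Table 2.

```python
def f1_from_percent(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, returned as a fraction in [0, 1]."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    return 2.0 * p * r / (p + r)


# (P/%, R/%, reported F1) taken from Table 2.
rows = {
    "YOLOv5": (74.2, 71.5, 0.728),
    "YOLOv7": (71.4, 70.1, 0.707),
    "FFD-YOLO": (75.3, 73.8, 0.745),
}

if __name__ == "__main__":
    for name, (p, r, reported) in rows.items():
        print(name, round(f1_from_percent(p, r), 3), reported)
```

Small differences in the last digit can occur for other rows because the published P and R values are themselves rounded.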

Table 3. Visual comparison of predictions from FFD-YOLO, YOLOv5, YOLOv7, and YOLOv8 (columns: No., original image, YOLOv5, YOLOv7, YOLOv8, FFD-YOLO; image rows 1 to 4)

Table 4. Visual comparison of small-target fire detection by FFD-YOLO, YOLOv5, YOLOv7, and YOLOv8 (columns: No., original image, YOLOv5, YOLOv7, YOLOv8, FFD-YOLO; image rows 1 to 4)

Table 5. Detection metrics on the FFHPV dataset after adding the OD-SPP module and Wise-IoU to the YOLOv7 framework

| Added module | P/% | R/% | mAP50/% | F1 | FPS/(frames·s⁻¹) |
| --- | --- | --- | --- | --- | --- |
| None | 71.4 | 70.1 | 73.6 | 0.707 | 56.7 |
| OD-SPP | 74.0 | 74.4 | 76.1 | 0.742 | 57.2 |
| Wise-IoU | 72.5 | 70.8 | 74.3 | 0.716 | 55.9 |
| OD-SPP+Wise-IoU | 75.3 | 73.8 | 77.6 | 0.745 | 56.8 |
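The gains quoted in the abstract follow directly from the ablation rows of Table 5. As a quick illustration, the sketch below subtracts the YOLOv7 baseline row from each variant; the dictionary layout and the helper name `gains_over_baseline` are assumptions, while the numbers are copied from Table 5.

```python
# Metrics from Table 5: P/%, R/%, mAP50/%, F1.
ABLATION = {
    "None": {"P": 71.4, "R": 70.1, "mAP50": 73.6, "F1": 0.707},
    "OD-SPP": {"P": 74.0, "R": 74.4, "mAP50": 76.1, "F1": 0.742},
    "Wise-IoU": {"P": 72.5, "R": 70.8, "mAP50": 74.3, "F1": 0.716},
    "OD-SPP+Wise-IoU": {"P": 75.3, "R": 73.8, "mAP50": 77.6, "F1": 0.745},
}


def gains_over_baseline(table, baseline="None"):
    """Return per-metric differences of every variant relative to the baseline row."""
    base = table[baseline]
    return {
        name: {metric: round(value - base[metric], 3) for metric, value in row.items()}
        for name, row in table.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, delta in gains_over_baseline(ABLATION).items():
        print(name, delta)
```

The combined variant gives the 3.9, 3.7, and 4.0 percentage-point gains and the 0.038 F1 gain reported in the abstract, while OD-SPP alone has the highest recall of the four rows.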

