Dense Crowd Counting Network Based on Multi-scale Perception


Abstract:

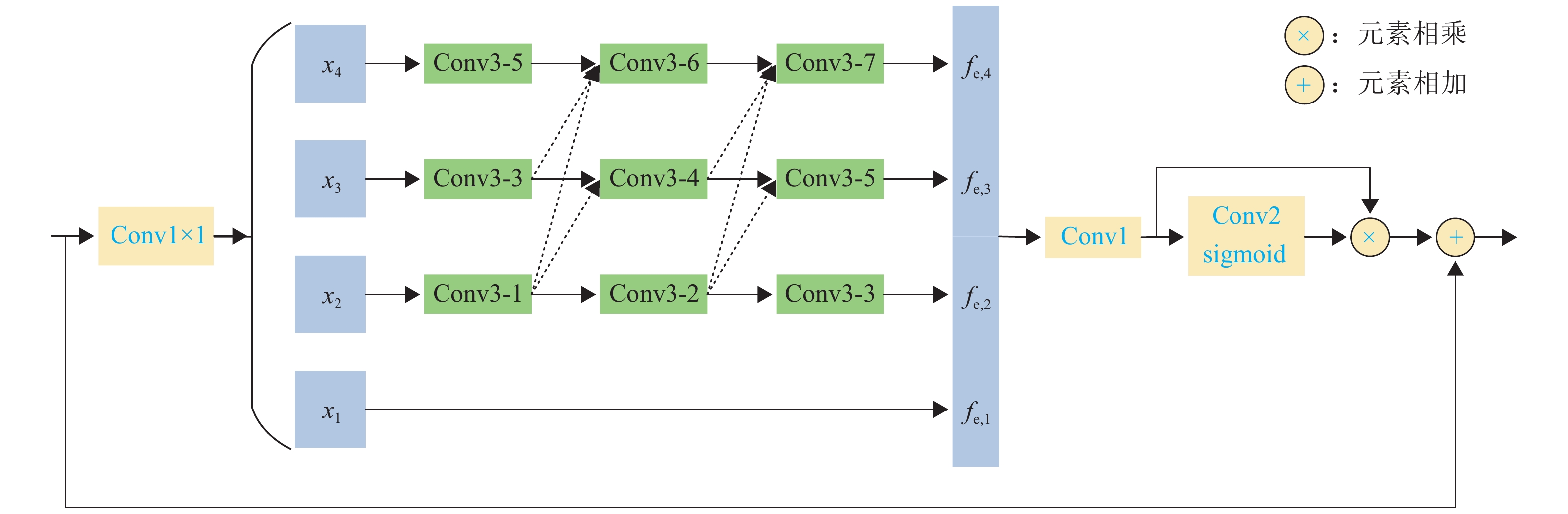

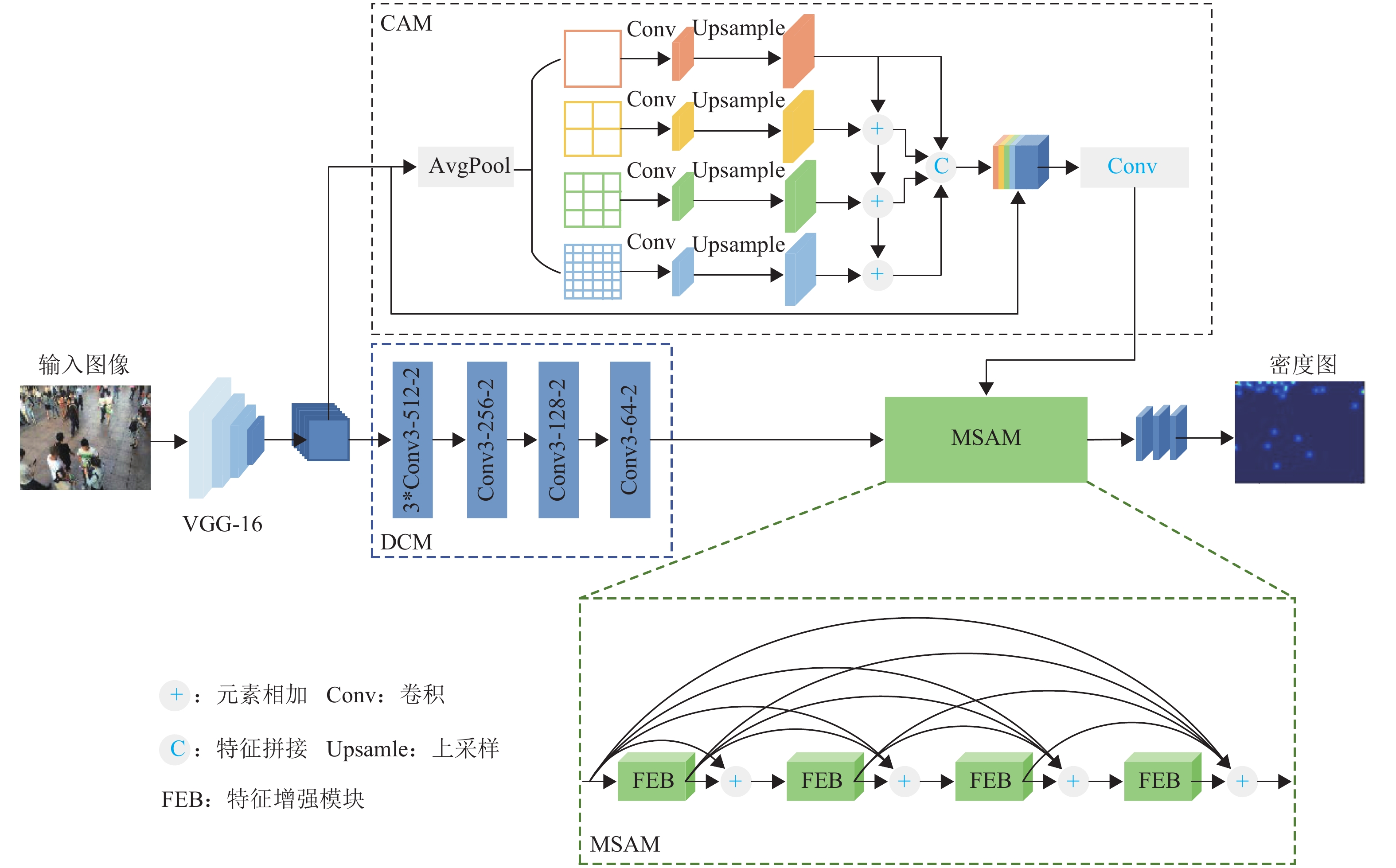

To address the diverse target scales and large variations in crowd scale found in dense crowd scenes, a dense crowd counting network based on multi-scale perception is proposed. First, because small-scale targets account for a large proportion of each image, a dilated convolution module is added on top of the VGG-16 (Visual Geometry Group, 16-layer) network to mine detailed image information. Second, to make full use of the multi-scale information of targets, a new context-aware module is constructed to extract contrast features between different scales. Finally, since target scales change continuously, a multi-scale feature aggregation module is designed to widen the range of dense scale sampling and strengthen the interaction of multi-scale information, thereby improving network performance. Experimental results show that on the Shanghai Tech (Part_A/Part_B) and UCF_CC_50 datasets, the mean absolute errors (MAEs) of the proposed method are 62.5, 6.9, and 156.5, and the root mean square errors (RMSEs) are 95.7, 11.0, and 223.3, respectively. Compared with the best competing method, the MAE and RMSE are reduced by 1.1% and 4.3% on the UCF_QNRF dataset, and by 8.7% and 13.9% on the NWPU dataset.
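The two metrics reported in the abstract can be sketched as follows. This is a minimal illustration that assumes the usual crowd-counting convention, where each test image contributes one predicted count and one ground-truth count; the function names and the sample counts are hypothetical and are not taken from the paper.

```python
import math


def mae(pred_counts, true_counts):
    """Mean absolute error between predicted and ground-truth counts."""
    if len(pred_counts) != len(true_counts) or not pred_counts:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - t) for p, t in zip(pred_counts, true_counts)) / len(pred_counts)


def rmse(pred_counts, true_counts):
    """Root mean square error between predicted and ground-truth counts."""
    if len(pred_counts) != len(true_counts) or not pred_counts:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - t) ** 2 for p, t in zip(pred_counts, true_counts))
    return math.sqrt(squared / len(pred_counts))


# Hypothetical per-image counts, for illustration only.
predicted = [105.0, 48.0, 310.0]
ground_truth = [100.0, 50.0, 300.0]

print(f"MAE  = {mae(predicted, ground_truth):.2f}")
print(f"RMSE = {rmse(predicted, ground_truth):.2f}")
```

Because RMSE squares each error before averaging, it penalizes large per-image errors more heavily than MAE, which is why the two are usually reported together.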


Key words:

- crowd density estimation

- multi-scale aggregation

- dilated convolution

- density map


Table 1. Comparison results of different methods on Shanghai Tech, UCF_CC_50, UCF_QNRF, and NWPU datasets

| Model | Part_A MAE | Part_A RMSE | Part_B MAE | Part_B RMSE | UCF_CC_50 MAE | UCF_CC_50 RMSE | UCF_QNRF MAE | UCF_QNRF RMSE | NWPU MAE | NWPU RMSE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MCNN[7] | 110.2 | 173.2 | 26.4 | 41.3 | 377.6 | 509.1 | 277.0 | 426.0 | 218.5 | 700.6 |
| CSRNet[10] | 68.2 | 115.0 | 10.6 | 16.0 | 266.1 | 397.5 | 120.3 | 208.5 | 104.8 | 433.4 |
| PDD-CNN[29] | 64.7 | 99.1 | 8.8 | 14.3 | 205.4 | 311.7 | 115.3 | 190.2 | - | - |
| TEDNet[18] | 64.2 | 109.1 | 8.2 | 12.8 | 249.4 | 354.5 | 113.0 | 188.0 | - | - |
| KDMG[30] | 63.8 | 99.2 | 7.8 | 12.7 | - | - | 99.5 | 173.0 | 100.5 | 415.5 |
| BL[31] | 62.8 | 101.8 | 7.7 | 12.7 | 229.3 | 308.2 | 88.7 | 154.8 | 93.6 | 470.3 |
| CAN[20] | 62.3 | 100.0 | 7.8 | 12.2 | 212.2 | 243.7 | 107.0 | 183.0 | 93.5 | 489.9 |
| MCANet[32] | 60.1 | 100.2 | 6.8 | 11.0 | 181.3 | 258.6 | 100.8 | 185.9 | - | - |
| SC2Net[33] | 58.9 | 97.7 | 6.9 | 11.4 | 209.4 | 286.3 | 98.5 | 174.5 | 89.7 | 348.9 |
| MSPNet | 62.5 | 95.7 | 6.9 | 11.0 | 156.5 | 223.3 | 87.7 | 148.2 | 81.9 | 300.3 |
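The relative reductions quoted in the abstract can be reproduced from the Table 1 entries. The sketch below assumes that the "best competing method" is the comparison row with the lowest error on each dataset (BL on UCF_QNRF, SC2Net on NWPU); the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers the error relative to `baseline`."""
    return (baseline - proposed) / baseline * 100.0


# (MAE, RMSE) pairs copied from Table 1.
best_competitor = {
    "UCF_QNRF": (88.7, 154.8),  # BL[31], assumed to be the best comparison row
    "NWPU": (89.7, 348.9),      # SC2Net[33], assumed to be the best comparison row
}
mspnet = {
    "UCF_QNRF": (87.7, 148.2),
    "NWPU": (81.9, 300.3),
}

for dataset, (base_mae, base_rmse) in best_competitor.items():
    ours_mae, ours_rmse = mspnet[dataset]
    print(
        f"{dataset}: MAE reduced by {relative_reduction(base_mae, ours_mae):.1f}%, "
        f"RMSE reduced by {relative_reduction(base_rmse, ours_rmse):.1f}%"
    )
```

Under that assumption the rounded values agree with the 1.1%/4.3% and 8.7%/13.9% figures stated in the abstract.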

Table 2. Ablation experiments of CAM structure

| Method | MAE | RMSE |
| --- | --- | --- |
| Ours + PPM | 63.6 | 105.4 |
| Ours + CAM | 62.5 | 95.7 |

Table 3. Ablation experiments of different module structures

| Method | MAE | RMSE |
| --- | --- | --- |
| CAM | 68.2 | 118.8 |
| DCM | 66.2 | 113.0 |
| DCM + CAM | 64.9 | 109.8 |
| CAM + MSAM | 65.5 | 111.4 |
| DCM + MSAM | 64.0 | 111.5 |
| DCM + CAM + MSAM | 62.5 | 95.7 |

Table 4. Ablation experiments on the number of FEB layers

| Number of FEB layers | MAE | RMSE |
| --- | --- | --- |
| 0 | 64.9 | 109.8 |
| 2 | 63.5 | 103.4 |
| 4 | 62.5 | 95.7 |
| 6 | 67.1 | 115.8 |

[1] FAN Z Z, ZHANG H, ZHANG Z, et al. A survey of crowd counting and density estimation based on convolutional neural network[J]. Neurocomputing, 2022, 472: 224-251. doi: 10.1016/j.neucom.2021.02.103

[2] YU Ying, ZHU Huilin, QIAN Jin, et al. Survey on deep learning based crowd counting[J]. Journal of Computer Research and Development, 2021, 58(12): 2724-2747. doi: 10.7544/issn1000-1239.2021.20200699

[3] TOPKAYA I S, ERDOGAN H, PORIKLI F. Counting people by clustering person detector outputs[C]//2014 11th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). Seoul: IEEE, 2014: 313-318.

[4] RYAN D, DENMAN S, SRIDHARAN S, et al. An evaluation of crowd counting methods, features and regression models[J]. Computer Vision and Image Understanding, 2015, 130: 1-17. doi: 10.1016/j.cviu.2014.07.008

[5] LEMPITSKY V, ZISSERMAN A. Learning to count objects in images[C]//Proceedings of the 23rd International Conference on Neural Information Processing Systems. New York: ACM, 2010: 1324-1332.

[6] WANG C, ZHANG H, YANG L, et al. Deep people counting in extremely dense crowds[C]//Proceedings of the 23rd ACM international conference on Multimedia. Brisbane: ACM, 2015: 1299-1302.

[7] ZHANG Y Y, ZHOU D S, CHEN S Q, et al. Single-image crowd counting via multi-column convolutional neural network[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas: IEEE, 2016: 589-597.

[8] SINDAGI V A, PATEL V M. Generating high-quality crowd density maps using contextual pyramid CNNs[C]//2017 IEEE International Conference on Computer Vision (ICCV). Venice: IEEE, 2017: 1879-1888.

[9] CAO X K, WANG Z P, ZHAO Y Y, et al. Scale aggregation network for accurate and efficient crowd counting[C]//European Conference on Computer Vision. Cham: Springer, 2018: 757-773.

[10] LI Y H, ZHANG X F, CHEN D M. CSRNet: dilated convolutional neural networks for understanding the highly congested scenes[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City: IEEE, 2018: 1091-1100.

[11] SIMONYAN K, ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[C]//3rd International Conference on Learning Representations. San Diego: [s.n.], 2015: 1-14.

[12] YU F, KOLTUN V. Multi-scale context aggregation by dilated convolutions[C]//Proceedings of the 4th International Conference on Learning Representations. Puerto Rico: ICLR, 2016: 1-13.

[13] TIAN Sheng, ZHANG Jianfeng, ZHANG Yutian, et al. Lane detection algorithm based on dilated convolution pyramid network[J]. Journal of Southwest Jiaotong University, 2020, 55(2): 386-392,416. doi: 10.3969/j.issn.0258-2724.20181026

[14] ZUO Jing, BA Yulin. Population-depth counting algorithm based on multiscale fusion[J]. Laser & Optoelectronics Progress, 2020, 57(24): 315-323.

[15] CHEN X Y, BIN Y R, SANG N, et al. Scale pyramid network for crowd counting[C]//2019 IEEE Winter Conference on Applications of Computer Vision (WACV). Waikoloa: IEEE, 2019: 1941-1950.

[16] SINDAGI V, PATEL V. Multi-level bottom-top and top-bottom feature fusion for crowd counting[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul: IEEE, 2019: 1002-1012.

[17] ZHOU Y, YANG J X, LI H R, et al. Adversarial learning for multiscale crowd counting under complex scenes[J]. IEEE Transactions on Cybernetics, 2021, 51(11): 5423-5432. doi: 10.1109/TCYB.2019.2956091

[18] JIANG X L, XIAO Z H, ZHANG B C, et al. Crowd counting and density estimation by trellis encoder-decoder networks[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach: IEEE, 2019: 6126-6135.

[19] TIAN Y K, LEI Y M, ZHANG J P, et al. PaDNet: pan-density crowd counting[J]. IEEE Transactions on Image Processing, 2020, 29: 2714-2727. doi: 10.1109/TIP.2019.2952083

[20] LIU W Z, SALZMANN M, FUA P. Context-aware crowd counting[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach: IEEE, 2019: 5094-5103.

[21] LI H, KONG W H, ZHANG S H. Effective crowd counting using multi-resolution context and image quality assessment-guided training[J]. Computer Vision and Image Understanding, 2020, 201: 103065.1-103065.10.

[22] IDREES H, SALEEMI I, SEIBERT C, et al. Multi-source multi-scale counting in extremely dense crowd images[C]//2013 IEEE Conference on Computer Vision and Pattern Recognition. Portland: IEEE, 2013: 2547-2554.

[23] IDREES H, TAYYAB M, ATHREY K, et al. Composition loss for counting, density map estimation and localization in dense crowds[C]//European Conference on Computer Vision. Cham: Springer, 2018: 544-559.

[24] WANG Q, GAO J Y, LIN W, et al. NWPU-crowd: a large-scale benchmark for crowd counting and localization[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(6): 2141-2149. doi: 10.1109/TPAMI.2020.3013269

[25] ZHAO H S, SHI J P, QI X J, et al. Pyramid scene parsing network[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu: IEEE, 2017: 6230-6239.

[26] ZHANG Z L, ZHANG X Y, PENG C, et al. ExFuse: enhancing feature fusion for semantic segmentation[C]//European Conference on Computer Vision. Cham: Springer, 2018: 273-288.

[27] WANG Yin, WANG Lide, QIU Ji. Real-time enhancement algorithm based on DenseNet structure for railroad low-light environment[J]. Journal of Southwest Jiaotong University, 2022, 57(6): 1349-1357. doi: 10.3969/j.issn.0258-2724.20210199

[28] DAI F, LIU H, MA Y K, et al. Dense scale network for crowd counting[C]//Proceedings of the 2021 International Conference on Multimedia Retrieval. Taipei: ACM, 2021: 64-72.

[29] WANG W X, LIU Q L, WANG W. Pyramid-dilated deep convolutional neural network for crowd counting[J]. Applied Intelligence, 2022, 52(2): 1825-1837. doi: 10.1007/s10489-021-02537-6

[30] WAN J, WANG Q Z, CHAN A B. Kernel-based density map generation for dense object counting[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(3): 1357-1370. doi: 10.1109/TPAMI.2020.3022878

[31] MA Z H, WEI X, HONG X P, et al. Bayesian loss for crowd count estimation with point supervision[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul: IEEE, 2019: 6141-6150.

[32] WANG X, LV R R, ZHAO Y, et al. Multi-scale context aggregation network with attention-guided for crowd counting[C]//2020 15th IEEE International Conference on Signal Processing (ICSP). Beijing: IEEE, 2020: 240-245.

[33] LIANG L J, ZHAO H L, ZHOU F B, et al. SC2Net: scale-aware crowd counting network with pyramid dilated convolution[J]. Applied Intelligence, 2023, 53(5): 5146-5159.
