Lane Detection Algorithm Based on Dilated Convolution Pyramid Network


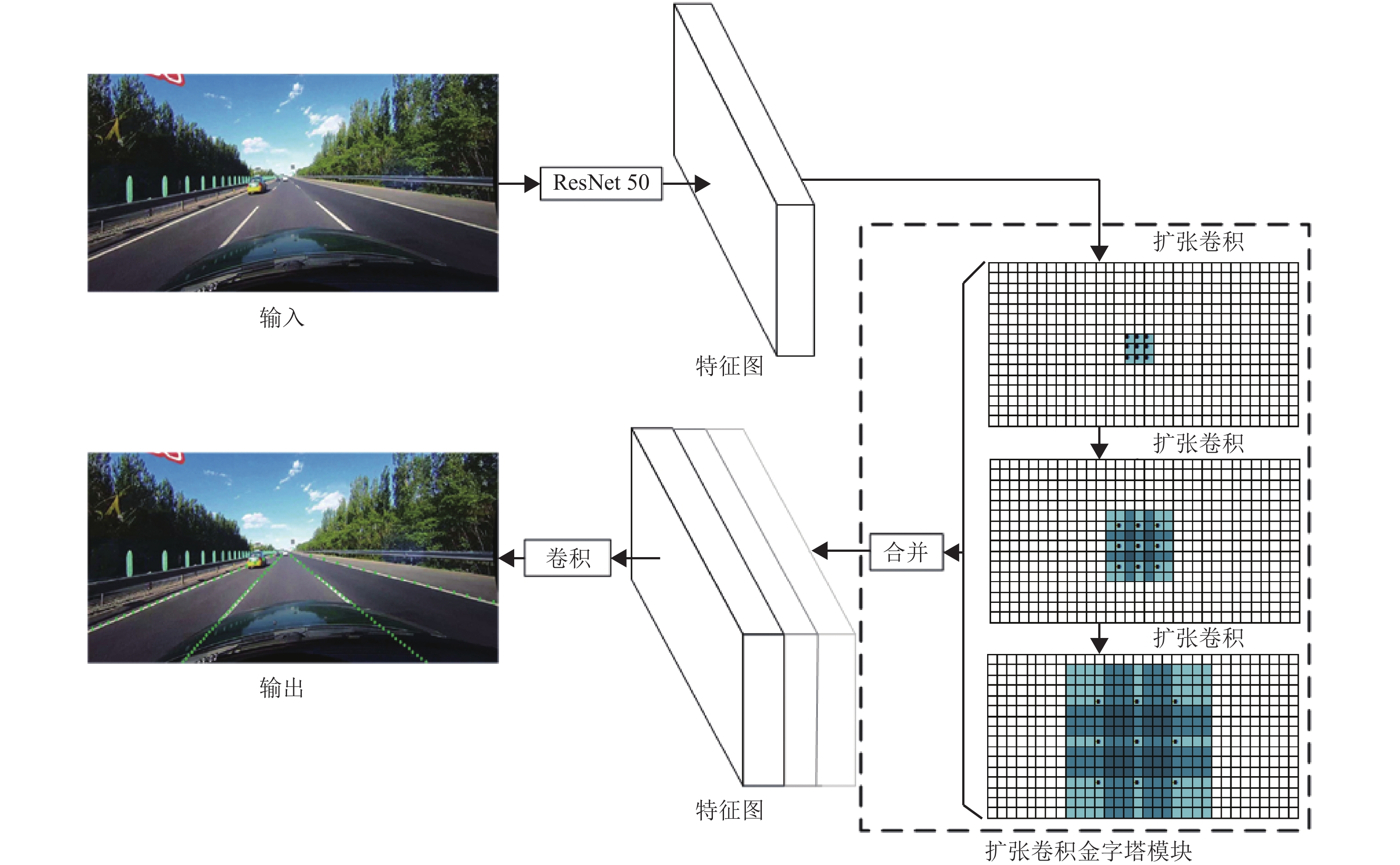

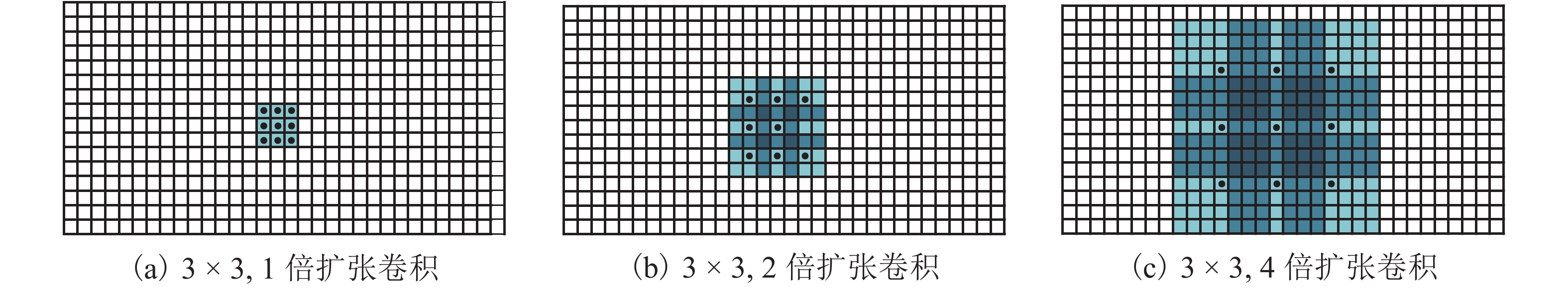

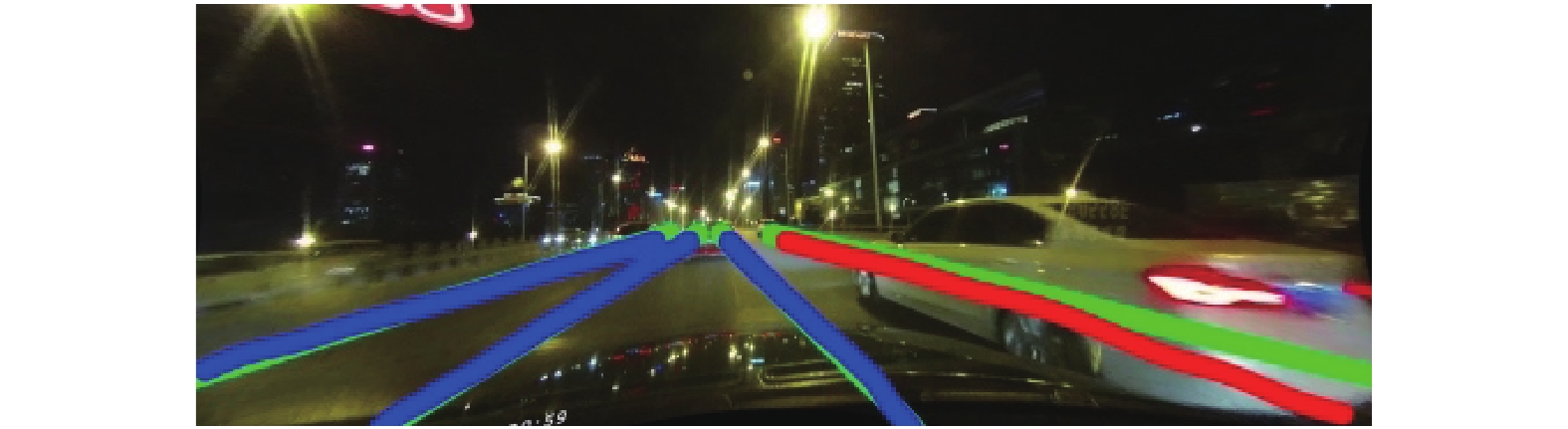

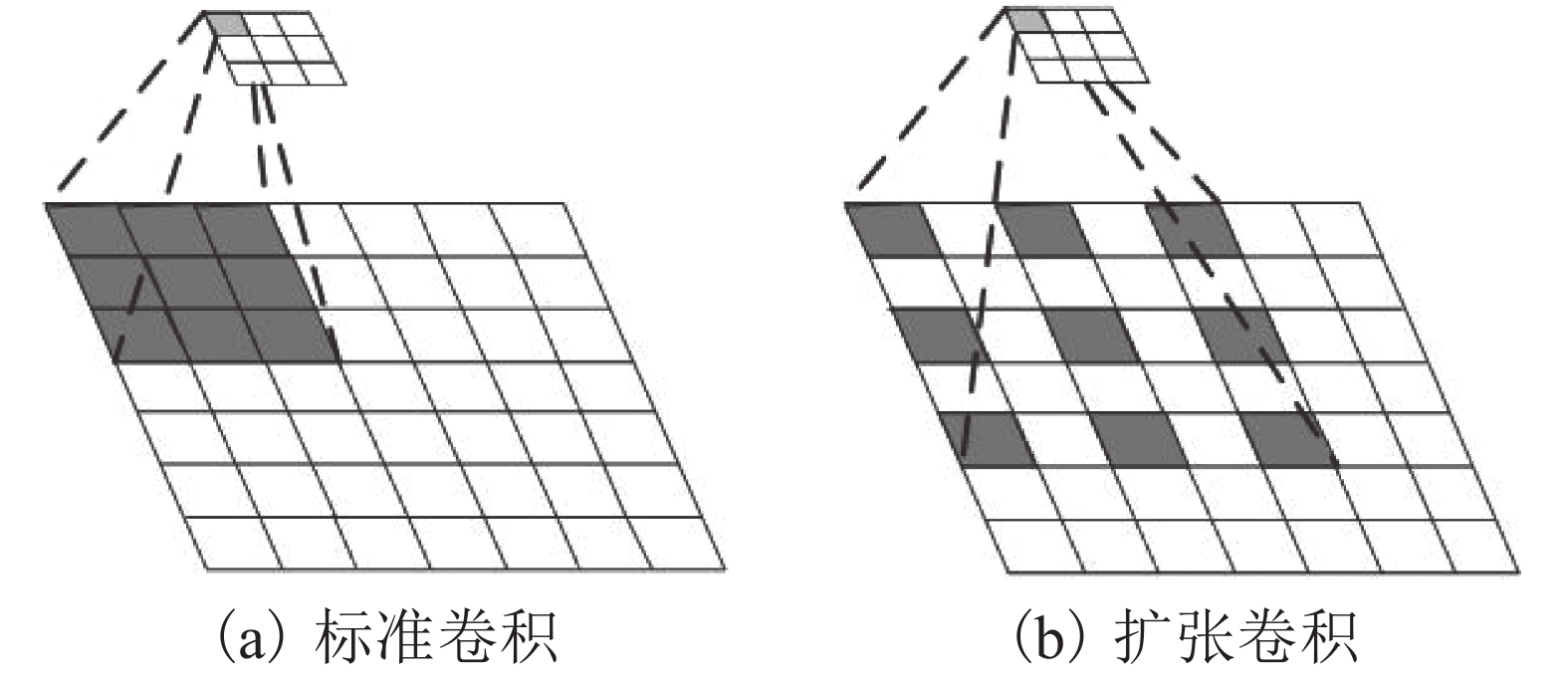

Abstract: To meet the accuracy and real-time requirements that advanced driver-assistance systems place on lane detection, an improved ResNet50 network is adopted as the base model to extract local lane-line features. Because dilated convolution can expand the receptive field exponentially, a dilated convolution pyramid module is designed to extract lane-line features fully at different scales. An "anchor grid" scheme is proposed, in which the output is divided into a set of grids and each grid is classified and regressed. After non-maximum suppression and other post-processing, the model outputs a set of lane-line marking points. Tested on the CULane multi-scene dataset with an intersection-over-union (IoU) threshold of 0.3, the model reaches an F-measure of 78.6% and a detection rate of 40 frames/s. With comparable evaluation metrics, its detection rate is much higher than that of the spatial convolutional neural network (SCNN) model, and it outperforms SCNN in difficult scenes such as dazzle light and curves.
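The claim that dilated convolution enlarges the receptive field exponentially can be illustrated with a small calculation. The sketch below is not the paper's pyramid module: it assumes stride-1 layers with a 3×3 kernel, and the dilation rates 1, 2, 4, 8 are hypothetical values chosen only to show the growth pattern.

```python
def receptive_field(kernel_size, dilations):
    """Receptive field after each stacked stride-1 convolution layer.

    Each layer with dilation d widens the field by (kernel_size - 1) * d.
    The dilation rates passed in are illustrative, not taken from the paper.
    """
    rf = 1
    sizes = []
    for d in dilations:
        rf += (kernel_size - 1) * d
        sizes.append(rf)
    return sizes


# Doubling dilation rates (hypothetical): the field roughly doubles per layer.
dilated = receptive_field(3, [1, 2, 4, 8])
# Ordinary convolutions (dilation 1): the field grows only linearly.
plain = receptive_field(3, [1, 1, 1, 1])

print("dilated:", dilated)
print("plain:  ", plain)
```

With the same number of layers and parameters, the doubled dilation rates cover a 31-pixel-wide field where ordinary convolutions cover 9 pixels, which is the property a pyramid of dilated branches relies on to see lane lines at several scales.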

Key words:

- deep learning
- computer vision
- lane detection

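Table 1. Evaluation results of two models on CULane

Values are in %. Each cell gives two values; the first corresponds to the IoU threshold of 0.3 cited in the abstract.

| Scene | SCNN precision | SCNN recall | SCNN F-measure | Dilated convolution pyramid network precision | Dilated convolution pyramid network recall | Dilated convolution pyramid network F-measure |
| --- | --- | --- | --- | --- | --- | --- |
| Normal | 95.6/90.8 | 95.5/90.4 | 95.7/90.6 | 92.4/87.8 | 94.2/89.5 | 93.3/88.7 |
| Crowded | 80.8/70.4 | 79.1/68.9 | 79.9/69.7 | 78.9/70.5 | 78.5/70.1 | 78.7/70.3 |
| Dazzle light | 74.6/60.4 | 70.8/56.6 | 72.6/58.4 | 73.0/62.6 | 74.4/63.8 | 73.7/63.2 |
| Shadow | 81.7/67.3 | 80.9/66.7 | 81.3/67.0 | 76.6/68.0 | 78.8/70.0 | 77.7/69.0 |
| No line | 60.3/45.7 | 54.6/41.3 | 57.3/43.4 | 56.6/46.4 | 54.9/45.0 | 55.7/45.7 |
| Arrow | 92.5/85.5 | 89.5/82.7 | 91.0/84.1 | 87.2/82.3 | 86.8/81.9 | 87.0/82.1 |
| Curve | 84.6/70.2 | 72.0/59.7 | 77.8/64.5 | 83.6/68.9 | 84.1/69.3 | 83.8/69.1 |
| Night | 78.3/67.4 | 75.2/64.8 | 76.7/66.1 | 73.4/64.6 | 74.8/65.8 | 74.1/65.2 |
| Average | 81.4/72.1 | 80.3/71.1 | 80.8/71.6 | 77.6/70.2 | 79.8/72.2 | 78.6/71.2 |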
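The F-measure values in Table 1 can be related to the precision and recall values with a short calculation. The sketch below assumes that F-measure means the standard balanced harmonic mean of precision and recall (β = 1); the source does not state the formula, so this is an assumption, and the function name is illustrative.

```python
def f_measure(precision, recall, beta=1.0):
    """F-measure from precision and recall (same units, e.g. percent).

    beta = 1 gives the balanced harmonic mean; this choice is an assumption,
    since the source does not spell out its formula.
    """
    b2 = beta * beta
    denom = b2 * precision + recall
    if denom == 0:
        return 0.0
    return (1 + b2) * precision * recall / denom


# Curve scene, dilated convolution pyramid network, first value of each cell.
curve_f = f_measure(83.6, 84.1)
print(f"curve F-measure: {curve_f:.2f}")
```

Under that assumption the curve-scene precision and recall land within rounding distance of the tabulated 83.8.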

Table 2. Detection effect of the two models

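[Image grid: columns are dazzle light, shadow, no line, and crossroad; rows are ground truth, SCNN, and the dilated convolution pyramid network.]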

